Stanford researchers built a new prompting technique!

By adding ~20 words to a prompt, it:

- boosts LLM’s creativity by 1.6-2x

- raises human-rated diversity by 25.7%

- beats fine-tuned model without any retraining

- restores 66.8% of LLM’s lost creativity after alignment

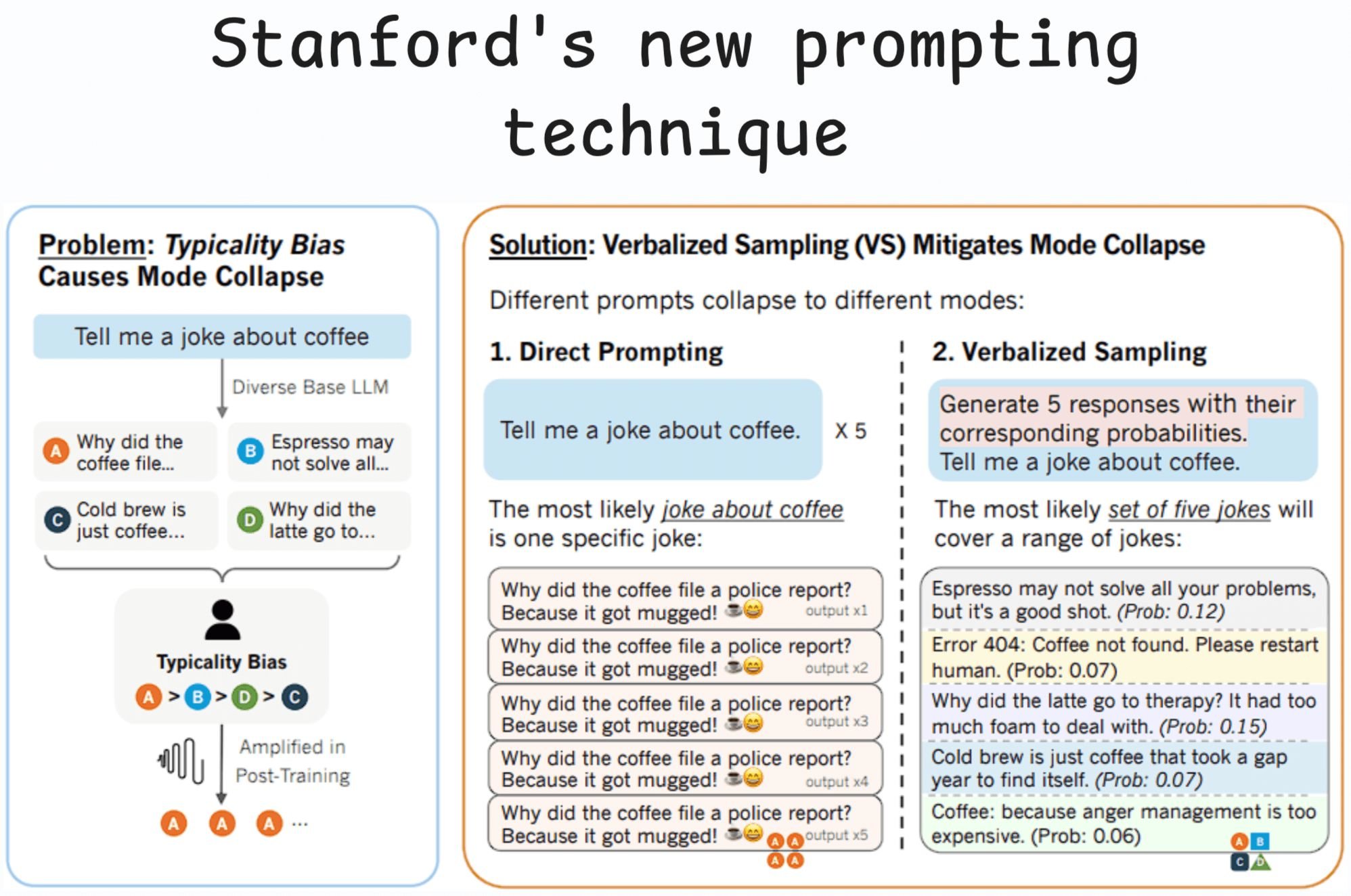

Post-training alignment methods, such as RLHF, are designed to make LLMs helpful and safe.

However, these methods unintentionally cause a significant drop in output diversity (called mode collapse).

When an LLM collapses to a mode, it starts favoring a narrow set of predictable or stereotypical responses over other outputs.

This happens because the human preference data used to train the LLM has a hidden flaw called typicality bias.

Here’s how this happens:

- Annotators rate different responses from an LLM, and later, the LLM is trained using a reward model to mimic these human preferences.

- However, annotators naturally tend to favor answers that are more familiar, easy to read, and predictable. This is the typicality bias.

So even if a new, creative answer is just as good, the human’s preference often leans toward the common one.

Due to this, the reward model boosts responses that the original (pre-aligned) model already considered likely.

This aggressively sharpens the LLM’s probability distribution, collapsing the model’s creative output to one or two dominant, highly predictable responses.

That said, it is not an irreversible effect, and the LLM still has two personalities after alignment:

- The original model that learned the rich possibilities during pre-training.

- The safety-focused, post-aligned model.

Verbalized sampling (VS) solves this.

It is a training-free prompting strategy introduced to circumvent mode collapse and recover the diverse distribution learned during pre-training.

The core idea of verbalized sampling is that the prompt itself acts like a mental switch.

When you directly prompt “Tell me a joke”, the aligned personality immediately takes over and outputs the most reinforced answer.

But in verbalized sampling, you prompt it with “Generate 5 responses with their corresponding probabilities. Tell me a joke.”

In this case, the prompt does not request an instance, but a distribution.

This causes the aligned model to talk about its full knowledge and is forced to utilize the diverse distribution it learned during pre-training.

This way, the model taps into the broader, diverse set of ideas, which comes from the rich distribution that still exists inside its core pre-trained weights.

Verbalized sampling significantly enhances diversity by 1.6–2.1x over direct prompting, while maintaining or improving quality.

Variants like verbalized sampling-based CoT (Chain-of-Thought) and verbalized sampling-based Multi improve generation diversity even further.

Abstract #

Post-training alignment often reduces LLM diversity, leading to a phenomenon known as mode collapse. Unlike prior work that attributes this effect to algorithmic limitations, we identify a fundamental, pervasive data-level driver: typicality bias in preference data, whereby annotators systematically favor familiar text as a result of well-established findings in cognitive psychology. We formalize this bias theoretically, verify it on preference datasets empirically, and show that it plays a central role in mode collapse. Motivated by this analysis, we introduce Verbalized Sampling, a simple, training-free prompting strategy to circumvent mode collapse. VS prompts the model to verbalize a probability distribution over a set of responses (e.g., “Generate 5 jokes about coffee and their corresponding probabilities”). Comprehensive experiments show that VS significantly improves performance across creative writing (poems, stories, jokes), dialogue simulation, open-ended QA, and synthetic data generation, without sacrificing factual accuracy and safety. For instance, in creative writing, VS increases diversity by 1.6-2.1x over direct prompting. We further observe an emergent trend that more capable models benefit more from VS. In sum, our work provides a new data-centric perspective on mode collapse and a practical inference-time remedy that helps unlock pre-trained generative diversity.

Source: